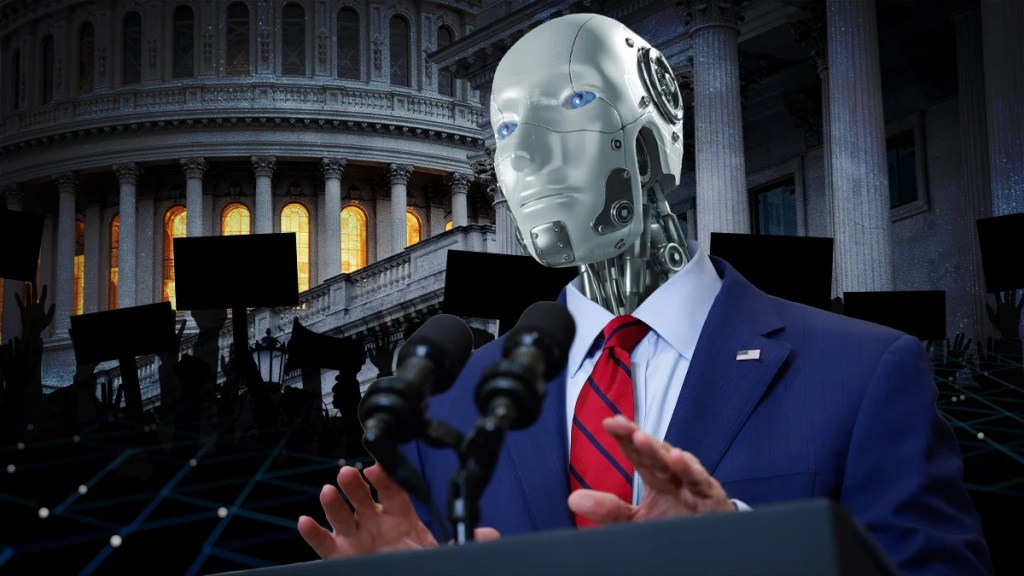

WASHINGTON — The entertainment industry is pushing for a say as Washington lawmakers draft artificial intelligence legislation aimed at establishing federal protections against the unauthorized use of AI-generated images, voices and likenesses.

The sense of urgency over AI is being felt across the industry — from the guilds to the studios to the tech companies themselves — all of whom are actively lobbying the federal government to make their voices heard.

Lobbying on AI issues has accelerated in the last year, according to an analysis by OpenSecrets.org. In the first nine months of 2023, more than 350 companies, nonprofits, trade groups and others lobbied on AI matters, the independent nonprofit said. From the first to the second quarter of 2023, the number of groups lobbying on AI soared 82%, from 129 to 235.

“In a world where AI can clone any individual and make them say or do things they never said or did, or replicate artists to perform in creative works they never consented to perform in, a federal right in voice and likeness is urgent,” Jeffrey Bennett, general counsel for the Screen Actors Guild-American Federation of Television and Radio Artists (SAG-AFTRA), told TheWrap in a statement.

Separately, the battle between copyright holders and generative AI companies like OpenAI, creator of ChatGPT and Sora, is playing out in the courts, where several high-profile cases (such as The New York Times vs. OpenAI and Microsoft) are underway. All parties, including Washington lawmakers, are waiting to see how the courts interpret copyright law and whether the unauthorized use of published content infringes on that law or is instead, as the tech companies argue, “fair use.”

But Hollywood unions say the rapidly developing nature of AI has already put creative industries on the back foot.

“We are potentially one bad court decision away from Congress being put in a position where they would have to scramble to maintain protections for U.S. copyrighted works,” Tyler McIntosh, the political and legislative director for IATSE, which represents 170,000 background and below-the-line Hollywood workers, told TheWrap.

“Otherwise, if these technologies are left unchecked, we face the next frontier of mass-scale online piracy,” he said. “Everything from feature films to commercials, you name it, could be ingested to produce AI content that directly competes with the U.S. entertainment market and provides opportunities for international actors to institute data exceptions to their laws.”

The lobbying activity comes as the industry is still recovering from last year’s months-long Hollywood strikes, in which AI concerns were a major part of negotiations, and as artists and performers face an ever-increasing onslaught of deepfakes. Earlier this month, fake images emerged of Katy Perry and Rihanna attending the Met Gala, which neither one attended. And Scarlett Johansson was livid to learn this week that OpenAI used a facsimile of her voice after she had rejected the company’s proposal to do so.

At the same time, Big Tech companies, including Microsoft, Alphabet, Meta and Amazon, are spending tens of billions of dollars to advance AI technology while “orchestrating a new era of AI transformation,” as Microsoft CEO Satya Nadella said of his own company during its Q3 earnings call last month.

Major Hollywood studios have also touted in-house AI initiatives, with Warner Bros. Discovery CEO David Zaslav telling analysts that finding ways to leverage AI — in part to help improve its “consumer offerings” — was one of the company’s “top priorities.”

The entertainment industry needs “better and stronger protections” for voice and likeness replication because it is seeing abuses proliferate, an entertainment attorney who was involved with the Hollywood strikes told TheWrap. For the industry, the highest priority is performance replacement, or taking an entertainer’s voice, likeness or both and creating new performances, he said.

SAG-AFTRA’s contract with the studios, signed in November, created a basic standard for ensuring consent and compensation for AI replication of an actor’s likeness or performance. But it stopped short of outright banning studios from potentially creating “synthetic performers” should the technology advance to the stage where they could be feasibly used in a Hollywood production.

New AI, new laws

While there are no federal regulations as yet addressing voice and likeness deepfakes, several bills have been introduced in Congress. Among these, the No AI Fraud Act aims to offer protections for human performers.

The legislation, short for No Artificial Intelligence Fake Replicas and Unauthorized Duplications Act, has drawn federal lobbying dollars from 21 organizations, including the Motion Picture Association (MPA), the Recording Industry Association of America (RIAA) and SAG-AFTRA.

Sponsored by Rep. Maria Elvira Salazar (R-FL), the No AI Fraud bill aims to establish a federal framework to protect an individual’s right to their likeness and voice against AI-generated fakes and forgeries.

The MPA and SAG-AFTRA are among the groups that have hired lobbyists to hit Capitol Hill on the legislation. Executives from both organizations were also among six witnesses who testified at an April 30 Senate hearing on potential companion legislation to that bill called the “No Fakes Act,” each taking decidedly different stances when it came to how broad the legislation should be.

No Fakes — short for Nurture Originals, Foster Art, And Keep Entertainment Safe — would hold people liable if they produce unauthorized digital replicas of someone in a performance and hold platforms liable if they knowingly host unauthorized digital replicas. The measure, at present only a one-page discussion draft, would exclude certain digital replicas based on First Amendment protections.

SAG-AFTRA’s Bennett said his guild “strongly supports” both the No Fakes and the No AI Fraud acts.

A spokesperson for the MPA directed questions from TheWrap about its top legislative priorities to comments made at the No Fakes hearing by MPA’s Ben Sheffner, senior vice president and associate general counsel for Law and Policy.

Sheffner called the No Fakes draft a “thoughtful contribution” to the debate over AI technology abuses. “However, legislating in this area necessarily involves doing something that the First Amendment sharply limits: regulating the content of speech,” he said. “It will take very careful drafting to accomplish the bill’s goals without inadvertently chilling or even prohibiting legitimate constitutionally protected uses of technology to enhance storytelling.”

Duncan Crabtree-Ireland, SAG-AFTRA national director and chief negotiator, said that the Supreme Court determined long ago that the First Amendment does not require that the speech of the press or other media be privileged over the protection of the person being depicted.

“On the contrary, the courts apply balancing tests which determine which right will prevail,” Crabtree-Ireland said. “These balancing tests are critical, and they’re incorporated into the discussion draft of the NO FAKES Act.”

Separately, the Recording Industry Association of America (RIAA), which represents music labels, has been vocal in support of the No AI Fraud Act as a “meaningful step towards building a safe, responsible and ethical AI ecosystem.”

An RIAA spokesperson told TheWrap in a statement that the group applauds efforts to protect people against deepfakes and voice clones with legislation including the No AI Fraud Act as well as Tennessee’s newly passed state law, the Ensuring Likeness, Voice and Image Security (ELVIS) Act. RIAA is also participating in discussions concerning the Senate’s No Fakes Act draft, she said.

“We are actively urging policymakers to reject lobbying for overbroad categorical exclusions in these bills that would only create more victims, including so-called ‘expressive works’ loopholes that would allow for the misappropriation of artists’ voices, images and likenesses digital replicas,” the spokesperson said, adding that companies that violate this should be held accountable.

“More broadly, we prioritize the need for consent from artists and rightsholders before their work is ingested in AI models, a free-market licensing system that provides fair compensation, and transparent recordkeeping that ensures artists know when and how their work has been used,” the RIAA spokesperson said. Artists, she said, should receive credit and attribution for the use of their works in AI models.

Another bipartisan bill the industry has eyes on is the Preventing Deepfakes of Intimate Images Act, the entertainment attorney said. It is important as the use of deepfake pornographic and sexualized imagery is growing, affecting everyone from celebrities to high school students.

Introduced in January by Rep. Joe Morelle (D-NY), the bill seeks to prohibit non-consensual disclosure of digitally altered intimate images by making the sharing of these images a criminal offense.

“Deepfake pornography is sexual exploitation, it’s abusive, and I’m astounded it is not already a federal crime,” Morelle said in a statement. “My legislation will finally make this dangerous practice illegal and hold perpetrators accountable.”

More recently, the Protecting Americans from Deceptive AI Act, a House bill introduced last month, would require that the National Institute of Standards and Technology establish a task force to develop standards and guidelines relating to identifying generative-AI content. The bill would also require that audio and video content created or substantially modified by generative AI be clearly labeled, and that tech platforms use those labels on such content.

Reps. Anna G. Eshoo (D-CA) and Neal Dunn (R-FL) introduced the legislation, which received support from several groups including the Authors Guild and the Society of Composers & Lyricists.

While people on all sides of the AI issue are spending heavily on lobbying, “the interests of the generative AI companies are being very effectively lobbied,” an attorney who focuses on AI and digital media told TheWrap. This, he added, “is one reason why we’re probably not going to see any significant AI legislation this year.”

Jeremy Fuster contributed reporting to this article.

The post Industry Scrambles for Influence in Washington As Lawmakers Weigh AI Restrictions appeared first on TheWrap.