OpenAI has unveiled a generative artificial intelligence tool capable of creating hyper-realistic videos, marking the San Francisco start-up’s first major encroachment onto Hollywood.

The system can seemingly produce videos of complex scenes with multiple characters, an array of different types of shots and mostly accurate details of subjects in relation to their backgrounds. A demonstration, rolled out Thursday, touted short videos the company said were generated in minutes in response to a text prompt of just a couple of sentences, though some had inconsistencies. It included a movie trailer of an astronaut traversing a desert planet and an animated scene of an expressive creature kneeling beside a melting red candle with shadows drifting in the background.

While similar AI video tools have been available, OpenAI’s system represents rapid growth of the tech that has the potential to undercut huge swaths of labor. It signals further mainstream adoption as the entertainment industry grapples with AI. A study surveying 300 leaders across Hollywood, issued in January, reported that three-fourths of respondents indicated that AI tools supported the elimination, reduction or consolidation of jobs at their companies. The study estimates that nearly 204,000 positions will be adversely affected over the next three years.

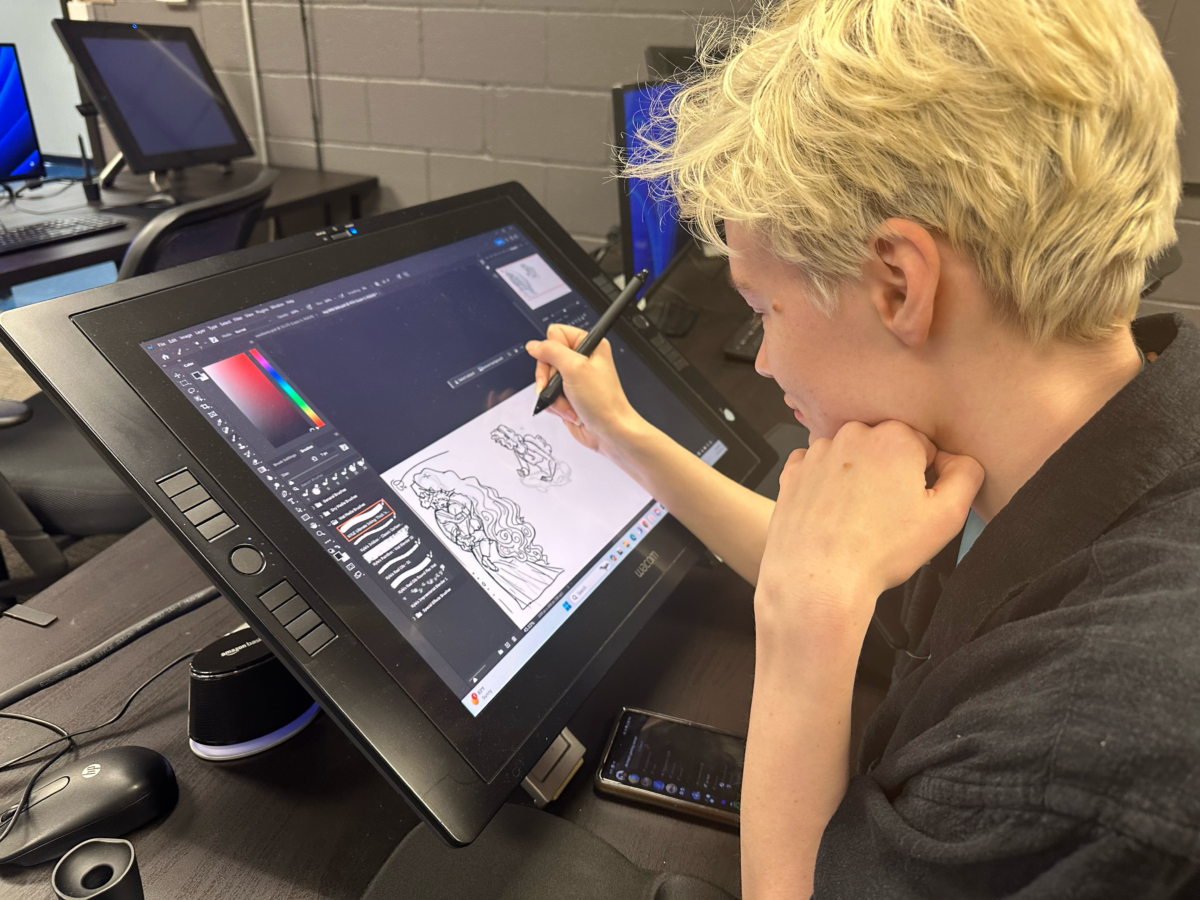

Sound engineers, voice actors and concept artists stood at the forefront of that displacement, according to the study. Visual effects and other postproduction work were also cited as particularly vulnerable. These positions, among others, are increasingly seen as replaceable by AI tools if the tech continues to advance.

“This is a clear alarm to the unions and professionals who are in crew in any capacity,” says Karla Ortiz, a concept artist who has worked on several Marvel titles. “This shows that the tech is here to compete with us. This is only the first step.”

Reid Southen, another concept artist who is credited on The Hunger Games, Transformers: The Last Knight and The Woman King, stresses that many of his peers are seeing diminished demand for their work.

“I’ve heard a lot of people say they’re leaving film,” he says. “I’ve been thinking of where I can pivot to if I can’t make a living out of this anymore.”

Most AI video generators are limited in the length of videos they can produce, and their output often has issues with extra limbs or unrealistic real-world physics. OpenAI’s tool, called Sora, appears to come close to generating content up to a minute long that maintains visual quality and consistency while adhering to users’ prompts. It allows for the switching of shots, which include close-ups, tracking and aerial, and the changing of shot compositions.

Still, there are shortcomings. In a video of a woman walking down a Tokyo street, the subject’s legs switch sides midway through the clip. The lapels on her jacket also appear to change between a wide shot and close-up.

“The current model has weaknesses,” OpenAI said in a blog post announcing the tool. “It may struggle with accurately simulating the physics of a complex scene, and may not understand specific instances of cause and effect.”

It added, “The model may also confuse spatial details of a prompt, for example, mixing up left and right, and may struggle with precise descriptions of events that take place over time, like following a specific camera trajectory.”

Sora will not be released to the public while it undergoes safety testing. It has been made available to experts in misinformation, hateful content and bias, as well as visual artists, designers and filmmakers, for feedback on improvements.

OpenAI also said it’s building tools to help detect misleading content generated by Sora. It plans to embed metadata in videos produced by the system that can identify them as being created by AI. Additionally, the tool will reject prompts that are in violation of usage policies, including those that request extreme violence, sexual content, celebrity likenesses or the intellectual property of others.

The company did not disclose the materials used to train its system. It no longer reveals the source of training data, attributing the decision to maintaining a competitive advantage over other companies. The firm has been sued by several authors accusing it of using their copyrighted books, the majority of which were downloaded from shadow library sites, according to several complaints against the company. If successful, some of these suits could force OpenAI to destroy systems that were trained on copyrighted material.

In a sign that AI may be quickly evolving using copyrighted material, AI image generator Midjourney started to return nearly exact replicas of frames from films after its latest update released in December. When prompted with “Thanos Infinity War,” Midjourney — an AI program that translates text into hyper-realistic graphics — produced an image of the purple-skinned villain in a frame that appears to be taken from the Marvel movie or promotional materials. It could also seemingly replicate almost any animation style, generating characters from an array of titles, including DreamWorks’ Shrek and Pixar’s Ratatouille.

“It’s going to demolish our industry,” Ortiz says. “Everybody is pitching projects using generative AI now. We’re already getting screwed.”

Commercial production may be among the main casualties of AI video tools, as quality is considered less important than in film and TV production. In Los Angeles, shooting of commercials has mostly continued to decline since 2018, reaching a seven-year low in 2023, not accounting for 2020, when the pandemic shuttered most productions.